Introducing Provider Ceph: Your K8s Control Plane for Ceph Object Storage

Today, we are excited to introduce a new addition to the Crossplane ecosystem: Provider Ceph. Developed by Akamai, Provider Ceph joins the ever-growing arsenal of Crossplane providers as it aims to become the ultimate Kubernetes control plane for Ceph object storage.

At Akamai, we were tasked with managing S3 buckets across multiple distributed Ceph clusters from within a local Kubernetes cluster. At the core of this system's control plane, alongside dozens of other microservices, sits Provider Ceph. The provider is designed specifically to manage S3 buckets across multiple Ceph backends in a scalable, performant way, and it is a vital component of our cloud-native architecture.

In this blog post, we will dive into the motivation behind developing Provider Ceph and why we felt it necessary to build our own solution. We'll explore how Crossplane emerged as the perfect framework for our needs and how we leveraged its existing constructs and utilities to craft Provider Ceph. Additionally, we'll discuss the unique twists and customizations we introduced to address our specific challenges.

Ultimately, our goal is to share our journey of creating a Crossplane provider capable of scaling to manage hundreds of thousands of S3 buckets across multiple Ceph backends. So let’s get stuck into the intricacies of Provider Ceph and its role in our cloud-native object storage system!

Meeting the Challenge: Requirements and Vision

As we planned an object storage system orchestrated by Kubernetes, we had several critical requirements. Although Kubernetes would be responsible for orchestrating the overall system, the object storage itself would live on multiple Ceph clusters external to the Kubernetes cluster. We therefore needed a component capable of bridging the gap between these two environments. Each S3 bucket had to be represented by a Kubernetes Custom Resource (CR), serving as the definitive source of truth for that bucket. Our solution also had to integrate seamlessly into an event-driven architecture, reconciling the desired state with the distributed reality of our Ceph clusters in an eventually consistent model.

Moreover, S3 operations needed to run asynchronously across backends to maintain performance. Bucket creation and deletion, for example, are time-consuming operations, so they had to run concurrently on all backends. This asynchronicity introduced complexity: once a bucket was created on any backend, it had to be considered ready for use, whereas a bucket could only be deemed deleted once it had been removed from all backends. Additionally, our component needed to monitor the health of each backend regularly to keep the overall system robust and reliable.
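The readiness and deletion rules above can be illustrated with a minimal Go sketch. It is not Provider Ceph's code: `Backend`, `fakeBackend`, `createOnAll`, `anySucceeded` and `allSucceeded` are hypothetical names, and the backend is a fake standing in for a per-cluster S3 client. Each backend is called in its own goroutine so that one slow cluster does not hold up the others, and the per-backend results are then reduced to "usable" (any success) and "done everywhere" (all successes).

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// errUnavailable is a sample error returned by the fake backend below.
var errUnavailable = errors.New("backend unavailable")

// Backend is a hypothetical stand-in for an S3 client bound to one Ceph cluster.
type Backend interface {
	CreateBucket(name string) error
}

// fakeBackend lets the sketch run without a Ceph cluster; it always returns err.
type fakeBackend struct{ err error }

func (f fakeBackend) CreateBucket(string) error { return f.err }

// createOnAll sends CreateBucket to every backend in its own goroutine and
// collects each backend's result (nil means success).
func createOnAll(bucket string, backends map[string]Backend) map[string]error {
	results := make(map[string]error, len(backends))
	var (
		mu sync.Mutex
		wg sync.WaitGroup
	)
	for name, b := range backends {
		wg.Add(1)
		go func(name string, b Backend) {
			defer wg.Done()
			err := b.CreateBucket(bucket)
			mu.Lock()
			results[name] = err
			mu.Unlock()
		}(name, b)
	}
	wg.Wait()
	return results
}

// anySucceeded: after a create fan-out, one success makes the bucket usable.
func anySucceeded(results map[string]error) bool {
	for _, err := range results {
		if err == nil {
			return true
		}
	}
	return false
}

// allSucceeded: after a create fan-out the bucket exists everywhere; after a
// delete fan-out, the bucket only counts as deleted when this is true.
func allSucceeded(results map[string]error) bool {
	for _, err := range results {
		if err != nil {
			return false
		}
	}
	return true
}

func main() {
	results := createOnAll("test-bucket", map[string]Backend{
		"ceph-cluster-a": fakeBackend{},
		"ceph-cluster-b": fakeBackend{},
		"ceph-cluster-c": fakeBackend{err: errUnavailable},
	})
	fmt.Println("ready:", anySucceeded(results), "synced:", allSucceeded(results)) // ready: true synced: false
}
```

Passing `name` and `b` as goroutine arguments keeps the sketch correct on Go versions before per-iteration loop variables.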

Furthermore, performance and scalability were paramount from the outset. With the potential for hundreds of thousands of buckets and corresponding CRs, the system had to handle that volume while implementing guardrails to keep performance high.

Empowered by Crossplane: Crafting Provider Ceph

Given our developers' background, the glaringly obvious solution to our problem was a Kubernetes operator. With requirements for a Kubernetes CR as the source of truth and a controller that fits an event-driven architecture and an eventual consistency model, this approach seemed a natural fit. The range of applications for Kubernetes operators and controllers has broadened over the years, with many going beyond basic functionality and reconciling objects outside the local Kubernetes cluster. In these cases, operators act as intermediaries between a Kubernetes cluster and an external system, reconciling objects beyond the reach of the Kubernetes API. While this type of operator is not uncommon, before diving headfirst into development we asked ourselves: could there be a better way? Is there something out there that could make our lives as developers a little easier as we aim to meet a series of complicated requirements with a familiar pattern?

Enter Crossplane. While maintaining the core principles of a Kubernetes operator, Crossplane offers a framework with some very neat utilities and constructs to facilitate a more seamless developer experience. Its extensible architecture and support for custom resources and controllers provided us with the flexibility to implement our solution while leveraging familiar Kubernetes paradigms. Additionally, Crossplane's emphasis on infrastructure as code and its ability to facilitate cross-environment communications made it an ideal choice for bridging the gap between Kubernetes and our external Ceph clusters.

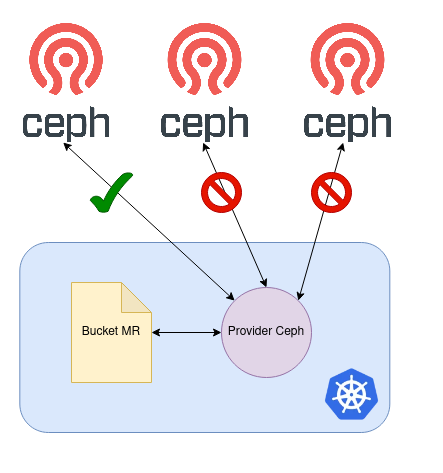

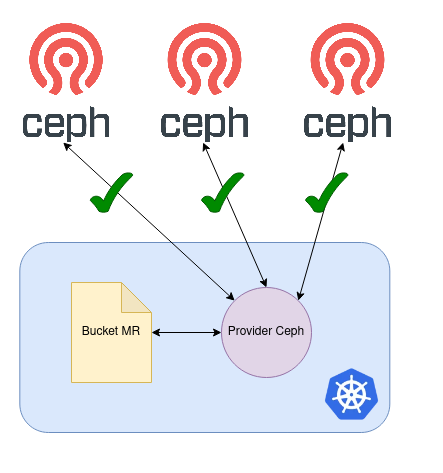

By utilizing Crossplane's robust ProviderConfig and SecretRef concepts, we were able to depart slightly from the conventional use case of a Crossplane provider (managing resources on a cloud provider) and instead manage S3 buckets across multiple Ceph clusters. In our implementation, each Ceph cluster is represented by a ProviderConfig object, which references a Kubernetes Secret containing credentials specific to that cluster. Every S3 bucket, in turn, is represented as a CR, or Managed Resource (MR) in Crossplane speak.
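To make the one-ProviderConfig-per-cluster mapping concrete, here is a minimal Go sketch that resolves each ProviderConfig's Secret into a per-cluster backend entry. The types are hypothetical, simplified stand-ins (real Kubernetes Secrets hold `[]byte` values, and a real provider reads them through the Kubernetes API), and the Secret key names `access_key` and `secret_key` are assumptions for illustration.

```go
package main

import (
	"fmt"
	"sort"
)

// ProviderConfig is a hypothetical, trimmed-down view of one Ceph cluster's ProviderConfig.
type ProviderConfig struct {
	Name       string // e.g. "ceph-cluster-a"
	Endpoint   string // RGW endpoint of this cluster (illustrative)
	SecretName string // stands in for the credentials SecretRef
}

// Credentials holds the S3 keys read from a cluster's Secret.
type Credentials struct {
	AccessKey, SecretKey string
}

// Backend pairs a cluster endpoint with its credentials.
type Backend struct {
	Endpoint string
	Creds    Credentials
}

// buildBackends resolves every ProviderConfig's Secret into a Backend keyed by
// ProviderConfig name. The "access_key"/"secret_key" key names are assumed.
func buildBackends(pcs []ProviderConfig, secrets map[string]map[string]string) (map[string]Backend, error) {
	backends := make(map[string]Backend, len(pcs))
	for _, pc := range pcs {
		data, ok := secrets[pc.SecretName]
		if !ok {
			return nil, fmt.Errorf("providerconfig %s: secret %s not found", pc.Name, pc.SecretName)
		}
		ak, sk := data["access_key"], data["secret_key"]
		if ak == "" || sk == "" {
			return nil, fmt.Errorf("providerconfig %s: secret %s is missing credentials", pc.Name, pc.SecretName)
		}
		backends[pc.Name] = Backend{Endpoint: pc.Endpoint, Creds: Credentials{AccessKey: ak, SecretKey: sk}}
	}
	return backends, nil
}

func main() {
	pcs := []ProviderConfig{
		{Name: "ceph-cluster-a", Endpoint: "http://ceph-cluster-a.ceph-svc", SecretName: "ceph-cluster-a-creds"},
		{Name: "ceph-cluster-b", Endpoint: "http://ceph-cluster-b.ceph-svc", SecretName: "ceph-cluster-b-creds"},
	}
	secrets := map[string]map[string]string{
		"ceph-cluster-a-creds": {"access_key": "AK-A", "secret_key": "SK-A"},
		"ceph-cluster-b-creds": {"access_key": "AK-B", "secret_key": "SK-B"},
	}
	backends, err := buildBackends(pcs, secrets)
	if err != nil {
		panic(err)
	}
	names := make([]string, 0, len(backends))
	for n := range backends {
		names = append(names, n)
	}
	sort.Strings(names)
	fmt.Println(names) // [ceph-cluster-a ceph-cluster-b]
}
```

Keying backends by ProviderConfig name is what lets later per-backend state (such as per-cluster conditions) be reported under the cluster's name.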

Out of the box, our new provider came equipped with a skeleton controller for Observe, Create, Update, and Delete operations on these bucket MRs. This gave us a definitive Kubernetes representation and source of truth for our buckets, an event-driven control loop adhering to an eventually consistent model, and the means to communicate seamlessly with external APIs. And just like that, Provider Ceph was born!
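The order in which a Crossplane-style controller drives these four operations can be sketched in Go. This is a deliberately simplified, hypothetical interface: crossplane-runtime's real external client has richer signatures and result types, and `reconcile`, `Observation` and `memClient` are illustrative names. The pattern is the same, though: observe first, then delete, create, or update depending on what was observed.

```go
package main

import "fmt"

// Observation is a simplified version of what an Observe call reports.
type Observation struct {
	ResourceExists   bool
	ResourceUpToDate bool
}

// ExternalClient is a hypothetical, simplified take on the four operations a
// provider implements for a managed resource.
type ExternalClient interface {
	Observe(bucket string) (Observation, error)
	Create(bucket string) error
	Update(bucket string) error
	Delete(bucket string) error
}

// reconcile observes first, then deletes, creates, or updates as needed,
// returning which action was taken.
func reconcile(c ExternalClient, bucket string, deleting bool) (string, error) {
	obs, err := c.Observe(bucket)
	if err != nil {
		return "", err
	}
	switch {
	case deleting && obs.ResourceExists:
		return "deleted", c.Delete(bucket)
	case deleting:
		return "gone", nil // nothing left to delete
	case !obs.ResourceExists:
		return "created", c.Create(bucket)
	case !obs.ResourceUpToDate:
		return "updated", c.Update(bucket)
	default:
		return "noop", nil
	}
}

// memClient is an in-memory fake; the map value records whether a bucket is up to date.
type memClient struct{ buckets map[string]bool }

func (m *memClient) Observe(b string) (Observation, error) {
	upToDate, ok := m.buckets[b]
	return Observation{ResourceExists: ok, ResourceUpToDate: upToDate}, nil
}

func (m *memClient) Create(b string) error {
	m.buckets[b] = true
	return nil
}

func (m *memClient) Update(b string) error {
	m.buckets[b] = true
	return nil
}

func (m *memClient) Delete(b string) error {
	delete(m.buckets, b)
	return nil
}

func main() {
	c := &memClient{buckets: map[string]bool{}}
	for _, deleting := range []bool{false, false, true, true} {
		action, err := reconcile(c, "test-bucket", deleting)
		if err != nil {
			panic(err)
		}
		fmt.Println(action) // created, noop, deleted, gone
	}
}
```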

Now, let's delve into the implementation phase and explore the myriad functionalities that Crossplane has in store for us.

Navigating Complexity: Leveraging Crossplane Conditions

Reflecting on our earlier goal of signaling a bucket as available once created on a single backend, we discovered that Crossplane's existing Condition types of Ready and Synced perfectly aligned with our requirements for an eventual consistency model.

When a bucket is successfully created on a single backend, it is considered ready for immediate use. This allows users to utilize the bucket while Provider Ceph continues to work in the background, attempting to create the bucket on all other backends.

```
kubectl get bucket test-bucket
NAME          READY   SYNCED   EXTERNAL-NAME   AGE
test-bucket   True    False    test-bucket     10s
```

This example shows a bucket MR reconciled by Provider Ceph. Initially, an S3 bucket is successfully created on a single Ceph cluster. As a result, the bucket MR is considered Ready, but not yet Synced, as the kubectl output shows. Until the bucket MR becomes Synced, reconciliation continues in the background, with the Crossplane runtime's built-in exponential back-off mechanism ensuring that sluggish Ceph clusters are not overwhelmed with S3 requests.
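The back-off itself comes from the Crossplane runtime and its underlying controller machinery; the Go sketch below only illustrates the general idea of per-item exponential back-off with a cap. The function name `backoff` and the one-second base and thirty-second cap are arbitrary values chosen for illustration, not Crossplane's defaults.

```go
package main

import (
	"fmt"
	"time"
)

// backoff returns the delay before the next retry after `failures`
// consecutive failed reconciles: base * 2^failures, capped at maxDelay.
// The doubling stops at the cap, so the value cannot overflow.
func backoff(failures int, base, maxDelay time.Duration) time.Duration {
	if base >= maxDelay {
		return maxDelay
	}
	d := base
	for i := 0; i < failures; i++ {
		d *= 2
		if d >= maxDelay {
			return maxDelay
		}
	}
	return d
}

func main() {
	for f := 0; f < 6; f++ {
		fmt.Println(f, backoff(f, time.Second, 30*time.Second)) // 1s, 2s, 4s, 8s, 16s, 30s
	}
}
```

Because the delay grows per failing resource, a bucket stuck on an unhealthy cluster is retried less and less often, while healthy resources keep reconciling promptly.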

```
kubectl get bucket test-bucket
NAME          READY   SYNCED   EXTERNAL-NAME   AGE
test-bucket   True    True     test-bucket     40s
```

Once the S3 bucket is successfully created on all backends, the bucket MR’s Conditions transition to being both Ready and Synced.

As we have discussed, Provider Ceph must be able to perform S3 operations asynchronously across multiple Ceph backends. Doing this effectively hinges on tracking and representing the success and failure of each operation, a task made possible by Crossplane's concept of MR Conditions. Provider Ceph uses Crossplane Conditions in several places. Beyond the basic status update demonstrated above, Conditions serve as structured, machine-readable messages that communicate more nuanced information about resources managed by Crossplane controllers, including any errors, warnings, or informational messages a platform engineer might need. Traditionally, Crossplane providers have used Conditions to convey the general state of a managed resource.

Provider Ceph extends this concept by displaying not only the overall condition of the bucket MR but also individual conditions for the corresponding S3 bucket on each backend. The example below shows the status of a bucket MR that was successfully created on two of three Ceph clusters. In such cases, platform engineers need to know the specifics of the failure, and per-backend Conditions provide exactly that: the when, where, and why.

```yaml
status:
  atProvider:
    backends:
      ceph-cluster-a:
        bucketCondition:
          lastTransitionTime: "2024-02-12T14:57:27Z"
          reason: Available
          status: "True"
          type: Ready
      ceph-cluster-b:
        bucketCondition:
          lastTransitionTime: "2024-02-12T14:57:27Z"
          reason: Available
          status: "True"
          type: Ready
      ceph-cluster-c:
        bucketCondition:
          lastTransitionTime: "2024-02-12T14:57:32Z"
          message: 'failed to perform head bucket: operation error S3: HeadBucket, request canceled, context deadline exceeded'
          reason: Unavailable
          status: "False"
          type: Ready
  conditions:
  - lastTransitionTime: "2024-02-12T14:57:32Z"
    reason: Available
    status: "True"
    type: Ready
  - lastTransitionTime: "2024-02-12T14:57:14Z"
    reason: ReconcileError
    status: "False"
    type: Synced
```

Not shown in this example are the Conditions of a bucket’s sub-resources on each backend, which Provider Ceph also supports and represents in the MR status. These provide another layer of visibility into the bucket's state. Initial support for bucket sub-resources covers bucket lifecycle configurations.
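How per-backend bucket conditions roll up into the MR's overall Ready and Synced conditions can be sketched in Go. This is a hypothetical illustration rather than Provider Ceph's code: `Condition` drops `lastTransitionTime` for brevity, `summarize` is an illustrative name, and while the reasons `Available`, `Unavailable` and `ReconcileError` appear in the status above, the `ReconcileSuccess` reason and the Synced message format are assumptions.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// Condition is a trimmed-down version of the condition shape in the status
// above (lastTransitionTime omitted to keep the sketch short).
type Condition struct {
	Type, Status, Reason, Message string
}

// summarize derives the overall Ready and Synced conditions from per-backend
// bucket conditions: Ready if any backend is ready, Synced only if all are.
// The Synced message naming failed backends is a hypothetical format.
func summarize(backends map[string]Condition) (ready, synced Condition) {
	var failed []string
	for name, c := range backends {
		if c.Status != "True" {
			failed = append(failed, name)
		}
	}
	sort.Strings(failed) // deterministic message order

	ready = Condition{Type: "Ready", Status: "False", Reason: "Unavailable"}
	if len(failed) < len(backends) {
		ready = Condition{Type: "Ready", Status: "True", Reason: "Available"}
	}
	synced = Condition{Type: "Synced", Status: "True", Reason: "ReconcileSuccess"}
	if len(failed) > 0 {
		synced = Condition{Type: "Synced", Status: "False", Reason: "ReconcileError",
			Message: "bucket not ready on: " + strings.Join(failed, ", ")}
	}
	return ready, synced
}

func main() {
	ready, synced := summarize(map[string]Condition{
		"ceph-cluster-a": {Type: "Ready", Status: "True", Reason: "Available"},
		"ceph-cluster-b": {Type: "Ready", Status: "True", Reason: "Available"},
		"ceph-cluster-c": {Type: "Ready", Status: "False", Reason: "Unavailable"},
	})
	fmt.Printf("%+v\n%+v\n", ready, synced)
}
```

With two of three clusters available, this yields a Ready condition of True and a Synced condition of False, the same combination shown in the status above.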

Additionally, Provider Ceph uses Conditions in its health-check controller. This controller implements a generic Reconcile function, as a standard Kubernetes operator would, periodically sending PutObject and GetObject requests to a designated health-check bucket on each Ceph backend. These requests serve as a useful smoke test of the health and reachability of each Ceph cluster, and the result of each health check is represented in the ProviderConfig status through, yet again, a Condition, albeit one tailored to our specific use case. Below, the ProviderConfig status of the Ceph cluster on which bucket creation failed shows that the cluster has become unreachable.
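Before looking at that status, here is a minimal Go sketch of a PutObject/GetObject smoke test of this kind, run on a fixed interval. Everything in it is hypothetical: `ObjectStore` stands in for a per-cluster S3 client, the object key `health-check` is an assumption, and the real controller's requests and messages differ (the status shown in this post, for instance, reports a HeadBucket failure). `HealthCheckFail` matches the reason in that status; the success reason `HealthCheckSuccess` is an assumption.

```go
package main

import (
	"bytes"
	"context"
	"errors"
	"fmt"
	"time"
)

// ObjectStore is a hypothetical minimal view of one Ceph cluster's S3 API.
type ObjectStore interface {
	PutObject(bucket, key string, body []byte) error
	GetObject(bucket, key string) ([]byte, error)
}

// Condition mirrors the fields shown in the ProviderConfig status.
type Condition struct {
	Type, Status, Reason, Message string
	LastTransitionTime            time.Time
}

// checkHealth writes payload to the health-check bucket and reads it back.
// The success reason "HealthCheckSuccess" is assumed for this sketch.
func checkHealth(store ObjectStore, bucket string, payload []byte) Condition {
	fail := func(err error) Condition {
		return Condition{Type: "Ready", Status: "False", Reason: "HealthCheckFail", Message: err.Error()}
	}
	if err := store.PutObject(bucket, "health-check", payload); err != nil {
		return fail(fmt.Errorf("put object: %w", err))
	}
	got, err := store.GetObject(bucket, "health-check")
	if err != nil {
		return fail(fmt.Errorf("get object: %w", err))
	}
	if !bytes.Equal(got, payload) {
		return fail(errors.New("health-check object read back with unexpected content"))
	}
	return Condition{Type: "Ready", Status: "True", Reason: "HealthCheckSuccess"}
}

// runHealthChecks repeats the check every interval until ctx is cancelled and
// passes each result to report (e.g. a ProviderConfig status update).
// Simplification: every result is stamped with the check time; a real status
// update would only move lastTransitionTime when Status changes.
func runHealthChecks(ctx context.Context, store ObjectStore, bucket string, every time.Duration, report func(Condition)) {
	ticker := time.NewTicker(every)
	defer ticker.Stop()
	for {
		select {
		case <-ctx.Done():
			return
		case t := <-ticker.C:
			c := checkHealth(store, bucket, []byte(t.Format(time.RFC3339Nano)))
			c.LastTransitionTime = t
			report(c)
		}
	}
}

// memStore is an in-memory fake; failPut simulates an unreachable cluster.
type memStore struct {
	objects map[string][]byte
	failPut bool
}

func (m *memStore) PutObject(bucket, key string, body []byte) error {
	if m.failPut {
		return errors.New("dial tcp: server misbehaving")
	}
	m.objects[bucket+"/"+key] = body
	return nil
}

func (m *memStore) GetObject(bucket, key string) ([]byte, error) {
	body, ok := m.objects[bucket+"/"+key]
	if !ok {
		return nil, errors.New("no such key")
	}
	return body, nil
}

func main() {
	store := &memStore{objects: map[string][]byte{}}
	ctx, cancel := context.WithTimeout(context.Background(), 35*time.Millisecond)
	defer cancel()
	runHealthChecks(ctx, store, "ceph-cluster-a-health-check", 10*time.Millisecond, func(c Condition) {
		fmt.Println(c.LastTransitionTime.Format(time.RFC3339), c.Status, c.Reason)
	})
}
```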

```yaml
status:
  conditions:
  - lastTransitionTime: "2024-02-12T15:07:24Z"
    message: 'failed to perform head bucket: operation error S3: HeadBucket, https response error StatusCode: 500, request send failed, "http://ceph-cluster-c.ceph-svc/ceph-cluster-c-health-check": dial tcp: lookup ceph-cluster-c.ceph-svc on 10.96.0.10:53: server misbehaving'
    reason: HealthCheckFail
    status: "False"
    type: Ready
```

Driving Efficiency

In Provider Ceph's event-driven, eventually consistent model within a Kubernetes control loop, one potential concern is the load that MR reconciliation places on the system, especially at the scale we aim to achieve. To address this challenge, Provider Ceph builds on the Pause feature introduced in Crossplane v1.14 with an innovative option called "auto-pause".

Auto-pause, enabled with Provider Ceph's --auto-pause-buckets command-line flag, automatically pauses any bucket MR that has reached the Ready and Synced Conditions. From then on, the bucket MR is no longer reconciled until the user explicitly un-pauses it. With auto-pause enabled, users are therefore responsible for un-pausing a bucket MR before updating or deleting it. Provider Ceph then re-pauses the bucket MR once the change has been reconciled successfully and the MR is again Ready and Synced, if that is the desired outcome. More details on how to enable auto-pause can be found in Provider Ceph’s documentation.

Auto-pause can also be enabled or disabled on individual bucket MRs, overriding the global flag. In a real-world system supporting hundreds of thousands of buckets, this approach significantly reduces the number of reconciliations, improving efficiency and scalability while leaving the user in control.
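The auto-pause decision can be sketched in Go on top of Crossplane's pause annotation, `crossplane.io/paused`. The `Bucket` type, its `AutoPause` override field, and the `autoPauseEnabled`/`maybePause` helpers are hypothetical simplifications for illustration, not Provider Ceph's actual types or field names: a per-bucket setting, when present, wins over the global flag, and the annotation is only added once the bucket is both Ready and Synced.

```go
package main

import "fmt"

// pausedAnnotation is the annotation Crossplane checks to skip reconciliation.
const pausedAnnotation = "crossplane.io/paused"

// Bucket is a hypothetical, trimmed-down bucket MR.
type Bucket struct {
	Annotations map[string]string
	AutoPause   *bool // hypothetical per-bucket override; nil means "use the global flag"
	Ready       bool
	Synced      bool
}

// autoPauseEnabled lets a per-bucket setting override the global flag.
func autoPauseEnabled(b *Bucket, globalFlag bool) bool {
	if b.AutoPause != nil {
		return *b.AutoPause
	}
	return globalFlag
}

// maybePause sets the pause annotation once the bucket is Ready and Synced and
// auto-pause applies. It reports whether the annotation was newly added.
func maybePause(b *Bucket, globalFlag bool) bool {
	if !autoPauseEnabled(b, globalFlag) || !b.Ready || !b.Synced {
		return false
	}
	if b.Annotations == nil {
		b.Annotations = map[string]string{}
	}
	if b.Annotations[pausedAnnotation] == "true" {
		return false // already paused
	}
	b.Annotations[pausedAnnotation] = "true"
	return true
}

func main() {
	off := false
	b := &Bucket{Ready: true, Synced: true}
	paused := maybePause(b, true)
	fmt.Println(paused, b.Annotations[pausedAnnotation]) // true true
	o := &Bucket{Ready: true, Synced: true, AutoPause: &off}
	fmt.Println(maybePause(o, true)) // false: the per-bucket override wins
}
```

Un-pausing in this model simply means removing the annotation (or setting it to anything other than `"true"`); once the update is reconciled and the bucket is Ready and Synced again, `maybePause` would re-apply it.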

Provider Ceph also uses an internal cache to reduce how often it has to query the RADOS Gateway (RGW). By maintaining a synchronized cache of bucket metadata, Provider Ceph improves efficiency, responsiveness, and resource utilization, avoiding what could otherwise become a considerable bottleneck at scale.
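How Provider Ceph keeps its cache synchronized is not described here, so the Go sketch below only shows the general read-through idea under stated assumptions: `BucketMeta`, `bucketCache` and the `fetch` callback (standing in for an RGW query) are hypothetical. Lookups are served from memory when possible, misses fall back to the backend and populate the cache, and writers update or evict entries after successful operations.

```go
package main

import (
	"fmt"
	"sync"
)

// BucketMeta is hypothetical cached metadata about one bucket.
type BucketMeta struct {
	Backends []string // backends the bucket is known to exist on
}

// bucketCache is a concurrency-safe map from bucket name to metadata.
type bucketCache struct {
	mu    sync.RWMutex
	items map[string]BucketMeta
}

func newBucketCache() *bucketCache {
	return &bucketCache{items: map[string]BucketMeta{}}
}

// Get serves from the cache; on a miss it calls fetch (standing in for an RGW
// query) and stores the result. Two concurrent misses may both call fetch,
// which is harmless in this sketch.
func (c *bucketCache) Get(name string, fetch func(string) (BucketMeta, error)) (BucketMeta, error) {
	c.mu.RLock()
	m, ok := c.items[name]
	c.mu.RUnlock()
	if ok {
		return m, nil
	}
	m, err := fetch(name)
	if err != nil {
		return BucketMeta{}, err
	}
	c.mu.Lock()
	c.items[name] = m
	c.mu.Unlock()
	return m, nil
}

// Set records metadata, e.g. after a successful create or update.
func (c *bucketCache) Set(name string, m BucketMeta) {
	c.mu.Lock()
	c.items[name] = m
	c.mu.Unlock()
}

// Delete evicts an entry, e.g. after the bucket is removed from all backends.
func (c *bucketCache) Delete(name string) {
	c.mu.Lock()
	delete(c.items, name)
	c.mu.Unlock()
}

func main() {
	calls := 0
	fetch := func(name string) (BucketMeta, error) {
		calls++
		return BucketMeta{Backends: []string{"ceph-cluster-a"}}, nil
	}
	c := newBucketCache()
	c.Get("test-bucket", fetch)
	c.Get("test-bucket", fetch)
	fmt.Println("RGW queries:", calls) // RGW queries: 1
}
```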

Staying Secure

To secure connections to your Ceph backends, Provider Ceph offers a flexible authentication option based on the Security Token Service (STS) AssumeRole action. As mentioned earlier, Provider Ceph by default uses a single Kubernetes Secret per Ceph cluster containing the credentials needed to communicate with that cluster.

However, users can optionally configure Provider Ceph to use STS AssumeRole for added security, fetching temporary credentials associated with the assumed role. It works as follows: alongside its default S3 client, Provider Ceph creates an STS client per Ceph cluster from the same Secrets. Before any S3 operation, this STS client sends an AssumeRole request. The AssumeRole response provides temporary credentials, which are used to create a new, ephemeral S3 client that performs the operation. To enable AssumeRole authentication, users set the assume-role-arn flag to the role's Amazon Resource Name (ARN), a required field of the AssumeRole request.
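The per-operation flow described above (AssumeRole, then an ephemeral S3 client built from the temporary credentials) can be sketched in Go with hypothetical interfaces in place of a real SDK. `STSClient`, `S3Client`, `withAssumedRole`, the session name `provider-ceph` and the example role ARN are all illustrative assumptions; the fakes only exist so the sketch runs without a cluster.

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

// TempCredentials mirrors the fields an STS AssumeRole response carries.
type TempCredentials struct {
	AccessKeyID, SecretAccessKey, SessionToken string
	Expiration                                 time.Time
}

// STSClient is a hypothetical per-cluster client built from the cluster's Secret.
type STSClient interface {
	AssumeRole(roleARN, sessionName string) (TempCredentials, error)
}

// S3Client is a hypothetical S3 client; only the method used here is shown.
type S3Client interface {
	CreateBucket(name string) error
}

// withAssumedRole calls AssumeRole, builds an ephemeral S3 client from the
// temporary credentials via newS3, and runs op with it.
func withAssumedRole(sts STSClient, roleARN string, newS3 func(TempCredentials) S3Client, op func(S3Client) error) error {
	if roleARN == "" {
		return errors.New("role ARN is required for AssumeRole")
	}
	creds, err := sts.AssumeRole(roleARN, "provider-ceph") // session name is illustrative
	if err != nil {
		return fmt.Errorf("assume role: %w", err)
	}
	return op(newS3(creds))
}

// fakeSTS returns canned temporary credentials.
type fakeSTS struct{}

func (fakeSTS) AssumeRole(roleARN, session string) (TempCredentials, error) {
	return TempCredentials{
		AccessKeyID:  "temp-" + session,
		SessionToken: "token",
		Expiration:   time.Now().Add(15 * time.Minute),
	}, nil
}

// recordingS3 remembers the credentials it was built with and the buckets it created.
type recordingS3 struct {
	creds   TempCredentials
	created []string
}

func (r *recordingS3) CreateBucket(name string) error {
	r.created = append(r.created, name)
	return nil
}

func main() {
	var last *recordingS3
	newS3 := func(c TempCredentials) S3Client {
		last = &recordingS3{creds: c}
		return last
	}
	err := withAssumedRole(fakeSTS{}, "arn:aws:iam:::role/example-role", newS3, func(s S3Client) error {
		return s.CreateBucket("test-bucket")
	})
	fmt.Println(err, last.creds.AccessKeyID, last.created)
}
```

Building a fresh S3 client per operation keeps temporary credentials short-lived and scoped to a single request, at the cost of one extra STS round trip per operation.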

Moreover, Provider Ceph supports a "bring-your-own" authentication service model, in which AssumeRole requests are redirected to a bespoke service whose address is specified in the ProviderConfig’s STSAddress field. The only requirement of the custom service is that it implements the AssumeRole API; beyond that, users are free to implement whatever logic they deem necessary. To extend this flexibility further, the bucket MR has an AssumeRoleTags option for passing metadata from the MR into the AssumeRole request, where the user’s custom authentication service can interpret it. See our documentation to learn more about how authentication is handled in Provider Ceph.
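For a custom service, "implementing the AssumeRole API" means accepting requests in the STS Query API form. The Go sketch below encodes such a request and decodes its session tags on the service side, assuming (this is not confirmed here) that AssumeRoleTags are carried as standard STS session tags (`Tags.member.N.Key` / `Tags.member.N.Value`). `assumeRoleForm` and `sessionTags` are hypothetical helpers, the tag map shape is an assumption, and request signing (SigV4) is deliberately left out.

```go
package main

import (
	"fmt"
	"net/url"
	"sort"
	"strconv"
)

// assumeRoleForm encodes an STS AssumeRole query request and carries the
// bucket MR's tags as session tags. Signing is omitted from this sketch.
func assumeRoleForm(roleARN, sessionName string, tags map[string]string) url.Values {
	form := url.Values{}
	form.Set("Action", "AssumeRole")
	form.Set("Version", "2011-06-15")
	form.Set("RoleArn", roleARN)
	form.Set("RoleSessionName", sessionName)

	keys := make([]string, 0, len(tags))
	for k := range tags {
		keys = append(keys, k)
	}
	sort.Strings(keys) // deterministic member numbering
	for i, k := range keys {
		n := strconv.Itoa(i + 1) // members are numbered from 1
		form.Set("Tags.member."+n+".Key", k)
		form.Set("Tags.member."+n+".Value", tags[k])
	}
	return form
}

// sessionTags is the inverse, as a custom authentication service might use it:
// it reads Tags.member.N entries until the first missing key.
func sessionTags(form url.Values) map[string]string {
	tags := map[string]string{}
	for i := 1; ; i++ {
		n := strconv.Itoa(i)
		k := form.Get("Tags.member." + n + ".Key")
		if k == "" {
			return tags
		}
		tags[k] = form.Get("Tags.member." + n + ".Value")
	}
}

func main() {
	form := assumeRoleForm("arn:aws:iam:::role/example-role", "provider-ceph",
		map[string]string{"team": "storage", "env": "dev"})
	fmt.Println(form.Encode())
	fmt.Println(sessionTags(form))
}
```

Whatever the custom service does with these tags (for example, choosing which credentials to issue) is entirely up to its implementer.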

Summary and Call to Action

Provider Ceph is still at the very early stages of its journey, but much progress has been made in a relatively short time. Through leveraging Crossplane's powerful concepts and utilities, Provider Ceph provides a transparent, scalable, performant, and secure Kubernetes control plane for managing S3 buckets across multiple Ceph backends.

While our use case is specific to our needs, others in the community may be seeking a similar solution for managing their Ceph clusters. We invite developers and users to explore Provider Ceph, provide feedback, and contribute to its development on GitHub, as well as engage with the Crossplane community. Additionally, we're excited to announce that we'll be speaking about Provider Ceph at the upcoming KubeCon EU 2024 in Paris. The talk will include a fascinating peek behind the curtain at the measures we have taken to achieve massive scale in bucket management, a topic not covered in this blog post. We encourage readers to join us for further discussions on enhancing cloud-native storage management with Provider Ceph.