Please note that we recently announced Terrajet, a code generation framework designed to generate Crossplane providers from Terraform.

Terrajet uses a different approach than the one presented in this post. We highly suggest checking it out to learn more about how we are leveraging Terraform to generate Crossplane providers today.

At the heart of the Crossplane effort are the Crossplane Providers. Providers are like Kubernetes controllers: they install into Crossplane and add CRDs for various cloud services and infrastructure. With the exception of the Equinix Provider, the vast majority of our Providers have been built for cloud infrastructure. A top request from the community was to enable hybrid cloud use cases, and specifically to add support for VMware infrastructure.

Today we’re announcing the latest effort from the Crossplane community - provider-terraform-vsphere. This new Provider has been built using a new code generation tool found in the terraform-provider-gen repository.

Members of the Crossplane community who work at AWS and Azure have pioneered using code generation to bootstrap new Crossplane providers in their ACK and k8s-infra projects. However, given the amount of diverse infrastructure Crossplane can orchestrate, we knew another approach to bootstrapping was needed.

Building on top of Terraform

While considering the options for generating Crossplane controllers, it became clear the biggest obstacle to a general solution is the huge amount of variation across the SDKs and metadata formats that providers use to describe their APIs. Within a given provider, there can also be significant variation in API semantics across services, due to years of evolution under pressure to maintain backwards compatibility for each service.

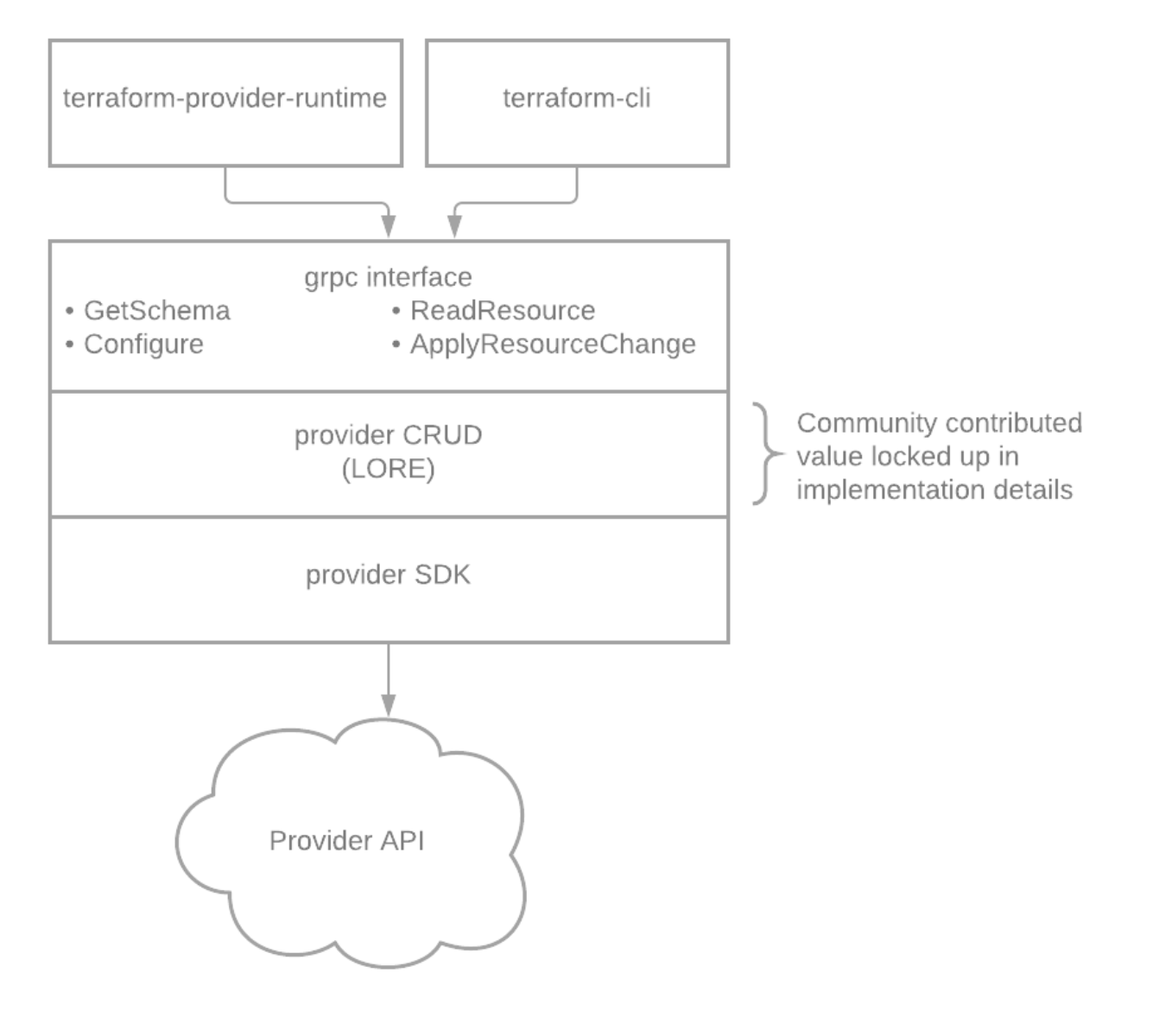

Since our team had experience as Terraform users and contributors, we knew Terraform Provider libraries represent quite a deep reserve of community knowledge, with contributions from countless individual developers, as well as employees of the providers themselves and HashiCorp. At a library level, we knew that a CRUD interface hides all the complexity of (often hand-written) code to deal with each provider and service on a case-by-case basis. A deep dive into the relationship between the terraform executable and provider plugins turned up an exciting opportunity for Crossplane to access this CRUD interface in a generic way.

How Terraform Works

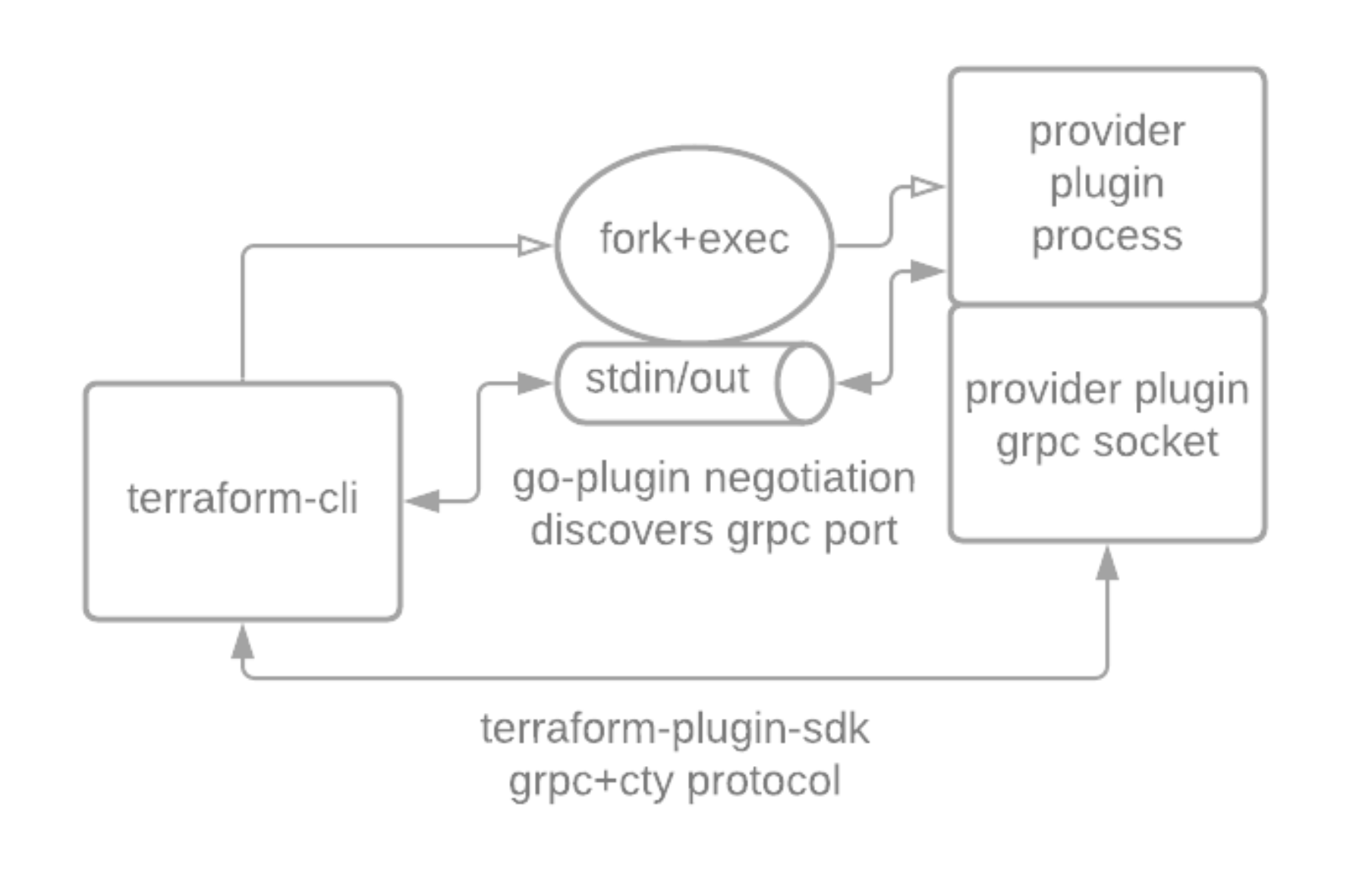

For some background, we’re going to look at what happens when you execute an "apply" command in Terraform:

Terraform providers are installed as separate binaries, downloaded from the terraform registry when terraform initializes. When terraform needs to interact with a provider of a specified version, it picks an appropriate binary and starts the provider as a sub-process, using an unofficial negotiation protocol to determine the port where a gRPC server started by the sub-process is listening. This gRPC server implements a set of RPC methods that provide metadata that the terraform cli uses to validate resources, and methods to configure client credentials and plan/apply HCL resource changes.
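
To make the negotiation step concrete, here is a minimal sketch of parsing the line a plugin prints on startup. It assumes the provider follows the hashicorp/go-plugin convention of writing a pipe-delimited handshake line to stdout; the `Handshake` type and `ParseHandshake` function are hypothetical names for illustration, not code from terraform-runtime.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// Handshake holds the fields of the line a plugin prints to stdout on startup.
// The field layout is an assumption based on the go-plugin convention.
type Handshake struct {
	CoreVersion string // version of the plugin handshake itself
	AppVersion  string // version of the provider protocol
	Network     string // "unix" or "tcp"
	Address     string // socket path or host:port
	Protocol    string // "grpc" or "netrpc"
}

// ParseHandshake splits a line such as "1|5|unix|/tmp/plugin123|grpc".
// Extra trailing fields are ignored.
func ParseHandshake(line string) (Handshake, error) {
	parts := strings.Split(strings.TrimSpace(line), "|")
	if len(parts) < 5 {
		return Handshake{}, errors.New("handshake: expected at least 5 pipe-delimited fields")
	}
	h := Handshake{
		CoreVersion: parts[0],
		AppVersion:  parts[1],
		Network:     parts[2],
		Address:     parts[3],
		Protocol:    parts[4],
	}
	if h.Protocol != "grpc" {
		return Handshake{}, fmt.Errorf("handshake: unsupported protocol %q", h.Protocol)
	}
	return h, nil
}

func main() {
	h, err := ParseHandshake("1|5|unix|/tmp/plugin123|grpc\n")
	if err != nil {
		panic(err)
	}
	fmt.Printf("dial %s %s (provider protocol v%s)\n", h.Network, h.Address, h.AppVersion)
}
```

Once the address is known, the caller can open a gRPC connection to it and start issuing provider RPCs.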

The methods are fairly generic; for instance resources are represented by a DynamicValue type (wire format spec for the curious). To work with these APIs, our generated code needs to extensively interact with the go-cty type system, which models objects (blocks) and attributes in terraform resource schemas, as well as representing values for input into the RPC serialization layer. Our goal is to interact with the provider binary in a manner that resembles the terraform cli, while also adhering to the Crossplane resource.Managed, ExternalConnector and ExternalClient interfaces.

In an attempt to isolate common functionality from provider-specific details, the design was broken up into 2 layers. terraform-runtime implements a generic ExternalClient and ExternalConnector. At initialization, an Implementation is registered for each resource supported by the provider. The Implementation enables the ExternalClient to operate on resource.Managed types, isolating it from concrete code-generated resource implementations. We’ll start by looking at how terraform-runtime fulfills the crossplane-runtime interfaces before taking a closer look at how terraform-provider-gen completes the picture by generating Implementations for each resource in a provider.
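
The registration idea can be sketched roughly as follows. This is not the terraform-runtime API: `Managed`, `Implementation`, `Registry` and `Folder` are simplified, hypothetical stand-ins that only show how a generic client can stay ignorant of concrete generated types.

```go
package main

import "fmt"

// Managed is a stand-in for crossplane-runtime's resource.Managed.
type Managed interface {
	Kind() string
}

// Implementation is a hypothetical bundle of generated, per-resource functions.
type Implementation struct {
	Encode func(Managed) (map[string]string, error)
}

// Registry maps a resource kind to the Implementation generated for it.
type Registry struct {
	impls map[string]Implementation
}

func NewRegistry() *Registry {
	return &Registry{impls: map[string]Implementation{}}
}

// Register is called once per resource at initialization.
func (r *Registry) Register(kind string, impl Implementation) {
	r.impls[kind] = impl
}

// Encode is what a generic client would call; it never names a concrete type.
func (r *Registry) Encode(mr Managed) (map[string]string, error) {
	impl, ok := r.impls[mr.Kind()]
	if !ok {
		return nil, fmt.Errorf("no implementation registered for kind %q", mr.Kind())
	}
	return impl.Encode(mr)
}

// Folder plays the role of one code-generated resource type.
type Folder struct{ Path string }

func (Folder) Kind() string { return "Folder" }

func main() {
	reg := NewRegistry()
	reg.Register("Folder", Implementation{
		Encode: func(mr Managed) (map[string]string, error) {
			f, ok := mr.(Folder)
			if !ok {
				return nil, fmt.Errorf("expected Folder, got %T", mr)
			}
			return map[string]string{"path": f.Path}, nil
		},
	})
	attrs, err := reg.Encode(Folder{Path: "/dc1/vm/team-a"})
	fmt.Println(attrs, err)
}
```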

IaC to Control Plane Translation

Thanks to Crossplane’s model of continuous reconciliation and granular, resource-level infrastructure management, we can forgo all the complex graph processing and diffing logic typically associated with terraform. The generic ExternalClient methods interact with an internal api package that presents a CRUD interface. The CRUD methods fulfill their API by constructing and invoking terraform provider gRPC requests (ReadResource/ApplyResourceChange) under the hood. The key to getting the right behavior from terraform is setting ApplyResourceChangeRequest.PriorState and PlannedState to either null or the desired/observed state, depending on the method:

- Create: PriorState=null, PlannedState=encoded resource.Managed Spec

- Update: PriorState=observed resource.Managed, PlannedState=encoded resource.Managed Spec

- Delete: PriorState=encoded resource.Managed Spec, PlannedState=null
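
The mapping in the list above can be sketched as a small function. The types here are hypothetical stand-ins: `State` replaces the encoded DynamicValue, and `ApplyArgs` models only the two request fields discussed in the text.

```go
package main

import "fmt"

// Op is the CRUD operation being translated into an apply request.
type Op int

const (
	Create Op = iota
	Update
	Delete
)

// State is a stand-in for an encoded resource; a nil State represents null.
type State map[string]string

// ApplyArgs models the two request fields that select terraform's behavior.
type ApplyArgs struct {
	PriorState   State
	PlannedState State
}

// BuildApplyArgs picks prior and planned state for the given operation.
// desired is the encoded spec; observed is what was last read from the provider.
func BuildApplyArgs(op Op, desired, observed State) (ApplyArgs, error) {
	switch op {
	case Create:
		return ApplyArgs{PriorState: nil, PlannedState: desired}, nil
	case Update:
		return ApplyArgs{PriorState: observed, PlannedState: desired}, nil
	case Delete:
		return ApplyArgs{PriorState: desired, PlannedState: nil}, nil
	default:
		return ApplyArgs{}, fmt.Errorf("unknown operation %d", op)
	}
}

func main() {
	desired := State{"name": "vm-1", "num_cpus": "4"}
	observed := State{"name": "vm-1", "num_cpus": "2"}
	for _, op := range []Op{Create, Update, Delete} {
		args, _ := BuildApplyArgs(op, desired, observed)
		fmt.Printf("op=%d prior=%v planned=%v\n", op, args.PriorState, args.PlannedState)
	}
}
```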

Not only does Crossplane eschew the challenges of diffing and graph comprehension, we also bypass the terraform state file mechanism, instead representing state in resource.Managed Custom Resources. One way we do this is adopting and repurposing the “id” attribute that all Terraform resources specify for use as a unique id in the terraform state file. This is the identifier that is used by the ReadResource method (which we invoke via Read). Part of the resource.Managed spec is an annotation, crossplane.io/external-name, which serves the same purpose as the terraform resource id. terraform-provider-gen filters the id field out of the generated schema, and generated encoding methods transparently fill in the value of the id field with the external-name annotation on the terraform side, whereas the decoder stores the value of the id field in the external-name annotation on the Kubernetes side.
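
As a rough illustration of the id handling described above, the sketch below moves the value between an `id` attribute and the `crossplane.io/external-name` annotation. `Object`, `Encode` and `Decode` are hypothetical simplifications that use plain string maps in place of generated types and cty values.

```go
package main

import "fmt"

const externalNameAnnotation = "crossplane.io/external-name"

// Object is a simplified stand-in for a managed custom resource.
type Object struct {
	Annotations map[string]string
	Spec        map[string]string // the generated schema has no "id" field
}

// Encode builds the attribute map sent to terraform, filling in "id"
// from the external-name annotation.
func Encode(o Object) map[string]string {
	attrs := make(map[string]string, len(o.Spec)+1)
	for k, v := range o.Spec {
		attrs[k] = v
	}
	attrs["id"] = o.Annotations[externalNameAnnotation]
	return attrs
}

// Decode copies attributes returned by terraform back onto the object,
// storing "id" in the annotation instead of the spec.
func Decode(attrs map[string]string, o *Object) {
	if o.Annotations == nil {
		o.Annotations = map[string]string{}
	}
	if o.Spec == nil {
		o.Spec = map[string]string{}
	}
	for k, v := range attrs {
		if k == "id" {
			o.Annotations[externalNameAnnotation] = v
			continue
		}
		o.Spec[k] = v
	}
}

func main() {
	var o Object
	Decode(map[string]string{"id": "vm-42", "name": "web"}, &o)
	fmt.Println(o.Annotations[externalNameAnnotation], o.Spec)
	fmt.Println(Encode(o)["id"])
}
```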

While terraform-runtime maps between the crossplane-runtime execution model and terraform gRPC methods, the missing pieces, specific to a provider+resource Implementation, are filled in by code generated by terraform-provider-gen:

- go types representing the resource schema, used by controller-runtime and angryjet code generation tools to generate methodsets necessary to fulfill kubernetes client needs and the resource.Managed interface, as well as Kubernetes CRD YAML

- translate a resource.Managed to a cty.Value, for serialization to terraform

- decode a cty.Value to a resource.Managed, for deserialization from terraform

- compare 2 versions of a resource, for late initialization, and to drive the generic ExternalClient state machine (should it create, update or delete the resource?)
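
The last item can be sketched as a field-by-field comparison feeding a small decision function. This covers only the create/update/delete decision, not late initialization, and the `VM` type, `Diff` and `Decide` are hypothetical names chosen for illustration.

```go
package main

import "fmt"

// Action is what the generic client should do next.
type Action string

const (
	NoOp   Action = "noop"
	Create Action = "create"
	Update Action = "update"
	Delete Action = "delete"
)

// VM is a hypothetical, heavily simplified resource spec.
type VM struct {
	Name   string
	CPUs   int
	Folder string
}

// Diff returns the names of the fields that differ between two versions.
func Diff(desired, observed VM) []string {
	var changed []string
	if desired.Name != observed.Name {
		changed = append(changed, "Name")
	}
	if desired.CPUs != observed.CPUs {
		changed = append(changed, "CPUs")
	}
	if desired.Folder != observed.Folder {
		changed = append(changed, "Folder")
	}
	return changed
}

// Decide maps the observation onto the next action.
// exists: the external resource was found; deleting: deletion was requested.
func Decide(exists, deleting bool, changed []string) Action {
	switch {
	case deleting && exists:
		return Delete
	case deleting:
		return NoOp // already gone, nothing left to do
	case !exists:
		return Create
	case len(changed) > 0:
		return Update
	default:
		return NoOp
	}
}

func main() {
	desired := VM{Name: "web", CPUs: 4, Folder: "team-a"}
	observed := VM{Name: "web", CPUs: 2, Folder: "team-a"}
	changed := Diff(desired, observed)
	fmt.Println(changed, Decide(true, false, changed))
}
```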

The provider’s GetSchema gRPC method provides a representation of the resource schema, modeled using the go-cty abstract type system, layered into the following go types:

- Block: An outer container for an object

- Attribute: A field in the object, with important metadata for code generation like the Type of the field, and whether it is computed, or required or optional

- NestedBlock: Resources can be arbitrarily nested, and NestedBlocks differentiate a nested object from other types of attributes

The ProviderConfig is also represented by a configschema.Schema object, allowing us to generate these go types as well. Rather than tightly coupling our code generation pipeline to this structure, we work in roughly 2 passes. The intermediate format between the 2 passes is a type system that roughly approximates go structs and attributes, with a single recursive union type called Field. A Field is either a struct or a plain field; a struct Field holds child Fields to represent its subfields, including nested structs. Each Field has a set of callbacks that yield the method to encode, decode or compare that specific field, and generate calls to invoke the equivalent operations on its child Fields. This enables us to recursively build up the encode/decode/compare call graph in generated code without unwieldy nesting.
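
A minimal sketch of such an intermediate type might look like the following. The `Field` shape, the callback signature and the `toCty` placeholder in the emitted text are assumptions made for illustration; the real terraform-provider-gen types may differ.

```go
package main

import (
	"fmt"
	"strings"
)

// Field is either a plain attribute (no Children) or a struct (with Children).
type Field struct {
	Name     string
	Children []Field
	// EncodeFn yields the body lines of the generated function named fn.
	EncodeFn func(f Field, fn string) []string
}

// attrEncode emits the statement that encodes a single attribute.
func attrEncode(f Field, fn string) []string {
	return []string{fmt.Sprintf("vals[%q] = toCty(p.%s)", strings.ToLower(f.Name), f.Name)}
}

// structEncode emits one call per child instead of inlining the child's logic.
func structEncode(f Field, fn string) []string {
	body := make([]string, 0, len(f.Children))
	for _, c := range f.Children {
		body = append(body, fmt.Sprintf("%s_%s(p, vals)", fn, c.Name))
	}
	return body
}

// Generate walks the tree recursively but records a flat set of functions.
func Generate(f Field, fn string, out map[string][]string) {
	out[fn] = f.EncodeFn(f, fn)
	for _, c := range f.Children {
		Generate(c, fn+"_"+c.Name, out)
	}
}

func main() {
	baz := Field{Name: "Baz", EncodeFn: attrEncode}
	bar := Field{Name: "Bar", Children: []Field{baz}, EncodeFn: structEncode}

	out := map[string][]string{}
	Generate(bar, "EncodeFoo_Bar", out)
	for _, fn := range []string{"EncodeFoo_Bar", "EncodeFoo_Bar_Baz"} {
		fmt.Println(fn, "->", out[fn])
	}
}
```

The walk is recursive, but each generated function only calls its direct children, which keeps the emitted code flat.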

We use the jen code generation library for generating the go types that represent resources as Kubernetes CRDs (processed by controller-gen and angryjet). For the encode/decode/compare methods, we found that stock go templates were more legible. Our go templates make heavy use of the ability to invoke a method on a template argument, with each Field recursively triggering the rendering of the functions for its child Fields in this fashion. Recursion is the name of the game in the code generation process, but the resulting code is decidedly non-recursive and hopefully easy to follow. For instance, if you have a Block Foo with a NestedBlock Bar and Attribute Baz, the resulting encode.go would look like this:

```go
// EncodeCty is the generic entry point: it narrows the managed resource to
// its concrete generated type and delegates to the type-specific encoder.
func (e *ctyEncoder) EncodeCty(mr resource.Managed, schema *providers.Schema) (cty.Value, error) {
	r := mr.(*Foo)
	return EncodeFoo(*r), nil
}

// EncodeFoo encodes the top-level Block and fills in the terraform "id"
// attribute from the external-name annotation.
func EncodeFoo(r Foo) cty.Value {
	ctyVal := make(map[string]cty.Value)
	EncodeFoo_Bar(r.Spec.ForProvider.Bar, ctyVal)
	en := meta.GetExternalName(&r)
	ctyVal["id"] = cty.StringVal(en)
	return cty.ObjectVal(ctyVal)
}

// EncodeFoo_Bar encodes the NestedBlock Bar as a single-element list.
func EncodeFoo_Bar(p Bar, vals map[string]cty.Value) {
	valsForCollection := make([]cty.Value, 1)
	ctyVal := make(map[string]cty.Value)
	EncodeFoo_Bar_Baz(p, ctyVal)
	valsForCollection[0] = cty.ObjectVal(ctyVal)
	if len(valsForCollection) == 0 {
		vals["bar"] = cty.ListValEmpty(cty.EmptyObject)
	} else {
		vals["bar"] = cty.ListVal(valsForCollection)
	}
}

// EncodeFoo_Bar_Baz encodes the Attribute Baz into its parent's value map.
func EncodeFoo_Bar_Baz(p Bar, vals map[string]cty.Value) {
	vals["baz"] = cty.BoolVal(p.Baz)
}
```

By unfolding the tree processing into discrete functions per-value, and always passing by reference, the code generator is able to make assumptions about how values will be passed through at each level and remain relatively simple. For a more complete example of what generated code looks like for a real resource type, check out the vSphere VirtualMachine resource: types.go, decode.go, encode.go, compare.go. With the Implementation interface fulfilled, plus some additional generated boilerplate to wire everything up for initialization, we’ve got a provider!

The result of all this tooling is that no custom code specific to the vSphere provider needs to be written in order to support all of its resource types. We are building a workflow where adding a new provider is as simple as copying a template repository, filling out a YAML file with metadata about the provider, and running go generate. The result is still highly experimental, and there are many rough edges to work on and bugs to uncover. That is where I’d like to ask anyone who currently manages this class of vSphere resources with terraform to kick the tires and give us some feedback on the experience. Just be careful, because this still hasn’t run against a real vSphere instance! Shout out to vcsim and kind for enabling us to test without leaving the comfort of localhost.

Huge shout out to everyone out there in the open source community who has made this work possible. Thank you to Upbound for the willingness to take a risk on such a gnarly endeavor, HashiCorp for blazing this trail and the generosity to build terraform in open source, and the terraform contributors who have built up an amazing library of code to normalize a raging sea of irregular infrastructure APIs.